tl;dr

We’ve built a prototype to show how we could interact with the Internet using a command-driven approach.

- A screen reader, but one that uses machine learning and natural language processing, in order to better understand both what the user wants to do, and what the web page says.

- One that can offer a conversational interface instead of just reading out everything on the page.

It’s a proof-of-concept, but it’s an exciting idea with a lot of potential and we’ve got a demo that shows it in action.

The problem : screen readers today

I’ve written about this before but here is a recap.

Visually impaired people can interact with the web using screen readers. These read out every element on a page.

The user has to make a mental model of the structure of the page as it’s read out, and keep this in their head as they arrow-key around the page.

For example, on a news site’s front page, once the screen reader has read out the page, you have to remember if the story you want is the fifth or sixth story in the list so you can tab the right number of times to get to it.

Imagine an automated telephone menu:

“for blah-blah-blah, press 1, for blather-blather-blather, press 2, for something-or-other, press 3 … for something-else-vague, press 9 …”

Imagine this menu was so long it took 15 minutes or more to read.

Imagine none of the options are an exact match for what you want. But by the time you get to the end, you can’t remember whether the closest match was the third, the fourth, or the fiftieth option.

The vision : a Conversational Internet

Software could be smarter.

If it understood more about the web page, it could describe it at a higher, task-oriented level. It could read out the relevant bits, instead of everything.

If it understood more about what the user wants to do, the user could just say that, instead of working out the manual navigation steps themselves.

The vision is software that can interpret web pages and offer a conversational interface to web browsing.

Making the vision a reality

This idea came from a meeting with the RLSB, a charity for the blind, that I wrote about last year.

I was convinced that we could do something amazing here. Earlier this year, I proposed it as an Extreme Blue project. It was accepted, and this summer I’ve mentored a group of interns who did amazing work on turning the vision into a prototype.

I want to talk about Extreme Blue, but I’ll save that for another post.

The prototype : what it does

We’ve developed a prototype that shows what the Conversational Internet could be like.

It is a proof-of-concept that can interpret many websites. In response to commands, it can navigate around them and identify the areas of a page and the types of text and media they contain. It can use this to provide a speech response with the information that the user wanted.

Commands can be typed, or provided as speech input.

a video of some of the capabilities of the prototype

The prototype : what it is

Our prototype is built using a UIMA architecture. It is made up of pipelines of discrete annotators, each using a different strategy to try and identify meaning behind areas of the web page the user is viewing.

Some of these strategies include using natural language processing (using IBM’s LanguageWare) to interpret meaning behind text.

Some use machine learning (using IBM’s SPSS Modeler) to learn from patterns and trends in the way web pages are structured and organised.

Architecture : the idea

There are two main tasks here.

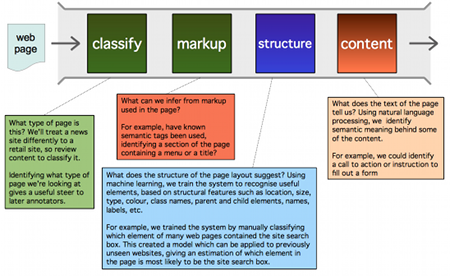

- Interpreting the web page that the user has just visited.

- Responding to commands about the page.

Firstly: Understanding the web page

When the user visits a webpage, the system needs to try and interpret it.

The contents of the web page – both the text contents, and the DOM structure of the HTML markup, including any semantic tags used – go through a UIMA analysis engine. They pass through a pipeline made up of several annotators, each of which is looking for something different in the page.

By itself, no one of these strategies is fool-proof. But they’re not stand-alone applications. As elements in a pipeline, each contributing a strategy to a collection of results, they’re all useful indicators. Each of the annotators in each stage of analysis contributes its own metadata, building up our picture of what the page contains.
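To make the pipeline idea a little more concrete, here is a rough sketch in plain Python. It is not the prototype’s code and it doesn’t use UIMA: the `Page` and `Annotation` types, the two toy annotators and the confidence numbers are all made up for illustration. It only shows the general shape: several weak strategies each add their own metadata, and the evidence is combined afterwards.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Annotation:
    element_id: str    # which page element this refers to
    label: str         # e.g. "navigation", "headline"
    source: str        # name of the annotator that produced it
    confidence: float  # illustrative weight, not a real model score


@dataclass
class Page:
    # Hypothetical simplified page: element id -> (tag name, text content)
    elements: Dict[str, Tuple[str, str]]
    annotations: List[Annotation] = field(default_factory=list)


def semantic_tag_annotator(page: Page) -> None:
    """Strong hint: trust semantic HTML tags where the author used them."""
    tag_labels = {"nav": "navigation", "h1": "headline", "article": "story"}
    for element_id, (tag, _text) in page.elements.items():
        if tag in tag_labels:
            page.annotations.append(
                Annotation(element_id, tag_labels[tag], "semantic_tag", 0.9))


def short_text_annotator(page: Page) -> None:
    """Weak hint: short text with no full stop often looks like a headline."""
    for element_id, (_tag, text) in page.elements.items():
        words = text.split()
        if 0 < len(words) <= 12 and not text.strip().endswith("."):
            page.annotations.append(
                Annotation(element_id, "headline", "short_text", 0.4))


def run_pipeline(page: Page, annotators: List[Callable[[Page], None]]) -> Page:
    """Run each annotator in turn; each one only adds metadata to the page."""
    for annotate in annotators:
        annotate(page)
    return page


def best_labels(page: Page) -> Dict[str, str]:
    """Sum confidence per (element, label) and keep the top label per element."""
    scores: Dict[str, Dict[str, float]] = {}
    for a in page.annotations:
        per_element = scores.setdefault(a.element_id, {})
        per_element[a.label] = per_element.get(a.label, 0.0) + a.confidence
    return {eid: max(labels, key=labels.get) for eid, labels in scores.items()}


page = Page({
    "e1": ("h1", "Flood warnings issued"),
    "e2": ("div", "Local team wins cup"),
    "e3": ("nav", "Home News Sport"),
    "e4": ("p", "The river burst its banks on Tuesday after days of heavy rain across the region."),
})
run_pipeline(page, [semantic_tag_annotator, short_text_annotator])
labels = best_labels(page)
```

In this toy example the short-text heuristic wrongly thinks the navigation bar looks like a headline, but the semantic tag annotator outvotes it. That is the point of combining indicators rather than relying on any single one.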

Secondly: Responding to commands

Once analysed, the system is ready to react to commands about the web page.
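As a very rough illustration of this second step, the sketch below takes a typed command, works out which kind of content it is asking about, and builds a short spoken-style response from an already-analysed page. It is an assumption-heavy stand-in: the prototype interprets commands with natural language processing, whereas this uses a simple keyword lookup, and the names `COMMAND_KEYWORDS` and `respond` are invented for the example.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical result of page analysis: (label, text) pairs in page order.
AnalysedPage = List[Tuple[str, str]]

# Hypothetical mapping from words a user might say to annotation labels.
# A real system would interpret the command with NLP rather than a lookup.
COMMAND_KEYWORDS: Dict[str, str] = {
    "headline": "headline",
    "headlines": "headline",
    "stories": "headline",
    "menu": "navigation",
    "links": "navigation",
}


def respond(command: str, page: AnalysedPage) -> str:
    """Return a text response for the command (to be passed to text-to-speech)."""
    words = command.lower().replace("?", "").split()
    wanted: Optional[str] = next(
        (COMMAND_KEYWORDS[w] for w in words if w in COMMAND_KEYWORDS), None)
    if wanted is None:
        return "Sorry, I did not understand that."

    # Read out only the relevant parts of the page, not everything.
    matches = [text for label, text in page if label == wanted]
    if not matches:
        return f"I could not find any {wanted} content on this page."

    numbered = ". ".join(f"{i}: {text}" for i, text in enumerate(matches, start=1))
    return f"I found {len(matches)} {wanted} items. {numbered}."


analysed = [
    ("navigation", "Home"),
    ("headline", "Flood warnings issued"),
    ("headline", "Local team wins cup"),
    ("body", "The river burst its banks on Tuesday."),
]
answer = respond("What are the headlines?", analysed)
```

The response skips the navigation and body text entirely, which is the difference from a screen reader that reads out every element in order.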

The prototype : status

This is not a finished, fully-implemented product. It’s an early work-in-progress. It’s not yet truly general purpose, and many of the key components are hard-coded or stubbed out.

However, there are real example implementations of each of the concepts and ideas in the vision, showing that the basic idea has potential and that these technologies are a good fit for this challenge. And the UIMA pipelines are real and coordinating the work, demonstrating that the architecture is sound.
